Careful reading instead of skimming through CMS certainly could have prevented some of my postings in this thread, though it has produced some interesting side effects (: commuting matrices).

Absorbing some more undergraduate material could have prevented this posting as a whole, because these "bad complex functions" can be detected by checking whether the Cauchy-Riemann equations hold for their real and imaginary parts. If they do not hold, then the functions are not holomorphic, which is a neater way to express the "bad" name calling.
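A minimal numerical sketch of such a check, not taken from CMS: the Cauchy-Riemann equations u_x = v_y , u_y = -v_x are equivalent to f_y = i.f_x , so the size of f_y - i.f_x can be estimated with central differences. The helper name `cr_residual`, the step size h and the sample point are assumptions made for illustration only.

```python
def cr_residual(f, z, h=1e-6):
    """Estimate |f_y - i.f_x| at z by central differences.

    The value is (close to) zero where the Cauchy-Riemann equations hold.
    The step size h is an arbitrary choice for this sketch.
    """
    f_x = (f(z + h) - f(z - h)) / (2 * h)            # partial derivative in x
    f_y = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)  # partial derivative in y
    return abs(f_y - 1j * f_x)


z0 = 0.7 + 0.3j  # arbitrary sample point (assumption)
print(cr_residual(lambda z: z * z, z0))          # holomorphic: residual near 0
print(cr_residual(lambda z: z.conjugate(), z0))  # "bad" function: residual near 2
```

A residual far from zero at some point is enough to conclude that the function is not holomorphic there; a small residual at a few sample points is only a hint, not a proof.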

Back to work:

- http://en.wikipedia.org/wiki/Cauchy-Riemann_equations

- http://mathworld.wolfram.com/Cauchy-RiemannEquations.html

- riemann.pdf (HdB)

Chapter 1. Power Series

Proof (Proposition 1.1) on page 12. Instead of having that function F(z,w) dropped out of the blue sky, let it be explained in excruciating detail:

Lemma: Sum(k=0,k=n-1) z^k.w^(n-k-1) = (z^n - w^n)/(z - w) for z <> w

Proof.

Let: f_n(z,w) = Sum(k=0,k=n-1) z^k.w^(n-k-1)

z.f_n(z,w) = Sum(k=0,k=n-1) z^(k+1).w^(n-k-1)

= z.w^(n-1) + z^2.w^(n-2) + .. + z^(n-1).w + z^n

w.f_n(z,w) = Sum(k=0,k=n-1) z^k.w^(n-k)

= w^n + z.w^(n-1) + z^2.w^(n-2) + .. + z^(n-1).w

----------------------------------------------------------------------

(z - w).f_n(z,w) = - w^n + z^n

Lemma: Sum(k=0,k=n-1) z^k.z^(n-k-1) = f_n(z,z) = n.z^(n-1) for z = w
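Both lemmas are easy to spot-check numerically. The sketch below evaluates f_n(z,w) directly from its definition and compares it with the closed forms; the function names and the sample points are assumptions for illustration.

```python
def f_n(z, w, n):
    """f_n(z,w) = Sum(k=0,k=n-1) z^k.w^(n-k-1), straight from the definition."""
    return sum(z**k * w**(n - k - 1) for k in range(n))


def closed_form(z, w, n):
    """(z^n - w^n)/(z - w) for z <> w, and n.z^(n-1) for z = w."""
    if z == w:
        return n * z**(n - 1)
    return (z**n - w**n) / (z - w)


z, w = 1.5 + 0.5j, -0.3 + 2.0j  # arbitrary sample points (assumption)
for n in range(1, 8):
    assert abs(f_n(z, w, n) - closed_form(z, w, n)) < 1e-9
    assert abs(f_n(z, z, n) - closed_form(z, z, n)) < 1e-9
```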

Define: F(z,w) = Sum(n=0,n=oo) c_n . f_n(z,w)

Published in 'sci.math':

Theorem (HdB).

Any Smoothed Complex Series is an Entire Function.

The meaning of this theorem is, in the first place, that we can effectively get rid of any radius of convergence. A smoothed complex series just converges everywhere. It also means that we can construct a complex series with random, but smoothed, coefficients, and any such series then converges everywhere as well. Random complex series with smoothed coefficients appear as smoothed but random closed curves, when restricted to arguments on the unit circle. That is because Complex Analysis is, in some sense, Fourier Analysis in disguise. See Fuzzyfied Lissajous Analysis for details.

Chapter 2. Preliminary Results on Holomorphic Functions

Published in 'sci.math':

- CMS: arbitrary too simple closed curves and the like with self-intersection (easier), with accompanying theory, executables & source, postings [ 1 ] , [ 2 ] in sci.math.

- CMS: Cauchy's theorem applied correctly ?

- CMS: arbitrary too simple closed curves

Or rather:

Lemma 2.1. (ML Inequality) on page 18: L is the length of γ . But this can only be the case if γ is not, for example, a Space-filling curve, with "infinite" length L . Curves called rectifiable are mentioned, but not explained, in the book on page 16.

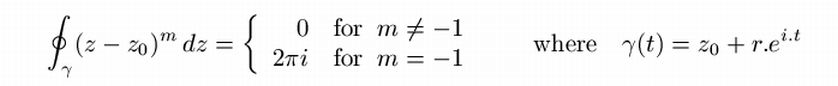

Example on page 20 shows that ∫γ dz / z = 2π.i where γ : [0,2π] => C is γ(t) = r.e^(i.t) with real r > 0 .

Nice place to hook in and show that, with the same closed curve (being a circle with radius r) : ∫γ z^m dz = 0 or 2π.i , and the latter only for m = -1 , where m is an integer. Enhance and repeat:

So, indeed it is in some sense just about the only way to get something nonzero by integrating an analytic function with an "isolated singularity" over a closed curve.
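The claim about the integer powers of z can be checked with a plain Riemann sum over the circle. This is only a sketch: the function name `circle_integral`, the radius r = 2 and the number of steps are assumptions, and the sum approximates the integral rather than evaluating it exactly.

```python
import cmath
import math


def circle_integral(f, r=1.0, n_steps=2000):
    """Approximate the integral of f(z) dz over the circle of radius r around 0."""
    total = 0j
    dt = 2 * math.pi / n_steps
    for k in range(n_steps):
        z = r * cmath.exp(1j * k * dt)  # gamma(t) = r.e^(i.t)
        total += f(z) * 1j * z * dt     # gamma'(t) dt = i.gamma(t) dt
    return total


for m in range(-3, 4):
    value = circle_integral(lambda z, m=m: z**m, r=2.0)
    expected = 2j * math.pi if m == -1 else 0
    print(m, abs(value - expected) < 1e-9)
```

Only m = -1 gives 2π.i ; every other integer power, including the singular ones with m < -1 , integrates to zero.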

On the same page, it reads: we have not given a definition of what it means for a point to lie "inside" a closed curve. In fact we will not be giving any such definition. Everybody seems to be afraid of the Jordan curve theorem. As a consequence of the paranoia, concepts which are intuitively clear - like "inside / outside" - are rather dismissed as "undefined". Irritating in the first place. And not quite necessary as well. It makes content of CMS more difficult to absorb. And it distracts attention from issues more relevant than this.

Worse. Spending so much effort on the generality of the (closed) curves needed to evaluate the complex line integrals sounds like a worthwhile undertaking, but it isn't. The reason is the following. A theory called Lissajous Analysis shows that any closed smooth curve can be represented by a Fourier Series, which is the same as a Complex Laurent Series specified for the unit circle. The reverse is also true. Any complex Laurent series, when specified for the unit circle, represents a closed smooth curve. Actually it means that a line integral over an arbitrary smooth closed curve can always be converted into a line integral over the unit circle:

∫γ f(z) dz = ∫O f(γ(z)) γ'(z) dz

An argument remotely similar to the above is found in CMS on page 80 as Theorem 5.1 (The Argument Principle). Oh well, not even remotely, it seems ..

So what are we interested in? More complicated contours or complicated complex functions? I wouldn't vote for the extreme and ONLY admit integrations over the unit circle. But the above shows that a constraint to, say, simple closed curves is very much reasonable. And that the reverse strategy - complicated contours - impressive as it may seem, is in fact rather futile: a parametrization issue.

Mathematics can be too general for comfort. If the generalization of a special result is more or less trivial, then show some restraint and go for the specialization.

Example. Let γ(z) = z^n with n ∈ N , then γ is the prototype of a curve winding around itself (winding number =) n times and:

∫γ dz / z = ∫O n z^(n-1) / z^n dz = n ∫O dz / z = n 2 π i
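The same outcome can be reproduced numerically by pulling the integral back to the unit circle, as in ∫γ f(z) dz = ∫O f(γ(z)) γ'(z) dz . The sketch below parametrizes the unit circle by t in [0,2π) ; the helper names `line_integral` and `winding_integral` are assumptions for illustration.

```python
import cmath
import math


def line_integral(f, gamma, dgamma, n_steps=2000):
    """Riemann sum for the integral of f along t -> gamma(t), 0 <= t < 2.pi."""
    dt = 2 * math.pi / n_steps
    return sum(f(gamma(k * dt)) * dgamma(k * dt) * dt for k in range(n_steps))


def winding_integral(n):
    """Integral of dz / z over the unit circle traversed n times."""
    gamma = lambda t: cmath.exp(1j * n * t)             # gamma = z^n with z = e^(i.t)
    dgamma = lambda t: 1j * n * cmath.exp(1j * n * t)   # derivative with respect to t
    return line_integral(lambda z: 1 / z, gamma, dgamma)


for n in (1, 2, 3):
    print(n, winding_integral(n) / (2j * math.pi))  # close to n
```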

Theorem 2.2 (Cauchy-Goursat: Cauchy's Theorem for triangles). Ullrich's Proof on page 23 starts with "Suppose not." : thereby suggesting a proof by contradiction. But only suggesting. The proof is actually a straightforward one, i.e. a direct proof.

It is noted that the (quite ingenious) proof of Cauchy-Goursat uses the fact that the absolute value of the complex derivative is maximal somewhere inside the triangles. Such is for example not the case with the function f(z) = 1/z . Thus the theorem need not be valid for such a function (actually it isn't). The reverse is not true. If a function is singular, then the integral around the singularity can (easily) be zero.

Example.

f(z) = 1/z^2 => ∫|z|=1 dz / z^2 = 0 .

On page 23 it also reads that you can get the conclusion from Green's theorem , i.e. for complex (thus real) differentiable functions within simple closed (Jordan) curves:

Also read Ullrich's comment on this treatment.

Note. The Least Squares Finite Element Method for Ideal Flow can be interpreted as the Cauchy Integral Theorem applied to Numerical Analysis. Take a look at page 6 of the article when seeking confirmation of this fact (Green's Theorem being the would-be real valued equivalent of Cauchy's).

Chapter 3. Elementary Results on Holomorphic Functions

analytic functions are locally nothing more than glorified polynomials.

Published in 'sci.math':

- CMS: where are the zeroes?

With pictures [ 1 ] , [ 2 ] , [ 3 ] , [ 4 ] , [ 5 ] , [ 6 ] , [ 7 ] , while investigating the initial polynomial 1 + z + z^2 / 2! + z^3 / 3! + z^4 / 4! + ... + z^n / n! of the entire function e^z , with help of Rouché's Theorem (I love this Wikipedia source. Because now it has become my theorem as well)

Note. The fact that |e^z| = e^Re(z) → 0 for Re(z) → -∞ explains why much of the region on the left is close to zero (: black) in the visualization.
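For readers without the pictures, the number of zeroes of such an initial polynomial inside a disk can also be counted numerically. Note that the sketch below does not use Rouché's Theorem; it swaps in the Argument Principle, i.e. it approximates the integral of s_n'(z) / s_n(z) over the circle |z| = R , divided by 2π.i , where s_n is the initial polynomial of degree n and s_n' = s_(n-1) . The function names, the radii and the number of steps are assumptions, and the circle is assumed not to pass through a zero.

```python
import cmath
import math


def exp_partial_sum(z, n):
    """s_n(z) = 1 + z + z^2 / 2! + ... + z^n / n!"""
    term, total = 1 + 0j, 1 + 0j
    for k in range(1, n + 1):
        term *= z / k
        total += term
    return total


def count_zeros_in_disk(n, radius, n_steps=4000):
    """Number of zeroes of s_n (n >= 1) with |z| < radius, via the Argument Principle."""
    total = 0j
    dt = 2 * math.pi / n_steps
    for k in range(n_steps):
        z = radius * cmath.exp(1j * k * dt)
        dz = 1j * z * dt
        # the derivative of s_n is s_(n-1)
        total += exp_partial_sum(z, n - 1) / exp_partial_sum(z, n) * dz
    return round((total / (2j * math.pi)).real)


print(count_zeros_in_disk(4, 5.0))  # → 4 : all zeroes of s_4 lie inside |z| < 5
print(count_zeros_in_disk(4, 0.9))  # → 0 : no zeroes that close to the origin
```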

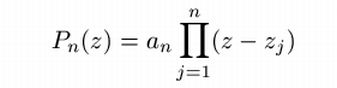

Corollary 3.7 (Fundamental Theorem of Algebra) on page 37. Quote:

for us it's just an amusing digression, at least for now. Back to work.

The following formula, expressing any complex polynomial in its roots, is even between scaring parentheses:

Why oh why, this seemingly disdainful treatment of a most impressive result? Has the author forgotten that this is the main thing Complex Analysis has been invented for in the old days? A preliminary version of The Argument Principle can be derived at this place. It's almost as if we have jumped into Chapter 5. Counting Zeroes and the Open Mapping Theorem , with:

If we choose for example R = 1.5 , then the pole is inside the curve P(t) and because the winding number is 2 , the outcome of the integral = 2 = number of zeroes.

This line of thought seems to be remotely similar to the Sketch of a Proof by Birkhoff and MacLane. Other favorites are easily found at this website.

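Theorem (remotely resembling the Picard theorems).

A polynomial of degree n assumes every complex value c exactly n times, counted with multiplicity.

Proof.

a_n z^n + a_(n-1) z^(n-1) + ... + a_2 z^2 + a_1 z + a_0 - c = 0 has n zeroes, counted with multiplicity, by the Fundamental Theorem of Algebra.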

A reasoning as in the last paragraph on page 40 of CMS could also be employed for other "Special Functions" than f(z) = exp(c.z) : f(z) = c.z ; f(z) = c.ln(z) ; f(z) = z^c .

Chapter 4. Logarithms, Winding Numbers and Cauchy's Theorem

Chapter 5. Counting Zeroes and the Open Mapping Theorem

On page 80 of CMS we find Theorem 5.1 (The Argument Principle). On the Internet (Wikipedia), however, the Argument principle is not only about zeroes, but about zeroes and poles. An advantage of the latter is that it makes it easier to prove (the traditional version of) Rouché's Theorem. Insofar as the Proof of Lemma 5.2. on page 81 is concerned, a picture says more than a thousand words:

And a thousand words are needed indeed, if people are driven to paranoia by inside / outside issues.

Theorem 5.4 (Rouché's Theorem). Suppose that γ is a smooth closed curve in the open set V such that Ind(γ,z) is either 0 or 1 for all z ∈ C \ γ* and equals 0 for all z ∈ C \ V , and let Ω = { z ∈ V : Ind(γ,z) = 1 } . If f , g ∈ H(V) and | f(z) - g(z) | < | f(z) | + | g(z) | for all z ∈ γ* then f and g have the same number of zeroes in Ω .

Also sprach David C. Ullrich. This author would rather say: let γ, V, Ω, z be as in the picture below and ..

Open mapping theorem (complex analysis)

Chapter 6. Euler's Formula for sin(x)

An overly complicated treatment that can easily be replaced by a page or two:

- Euler-Wallis formula for sin(x) (HdB)

- and a picture of f(x) = x/tan(x)

Chapter 10. Harmonic Functions

10.6. Intermission: Harmonic Functions and Brownian Motion, page 200

This rather Off-Topic one is a result of skimming, not reading:

Chapter 22. More on Brownian Motion

Off Topic

- JavE (ASCII art)

- A deceptively simple inequality

- Integral Conjecture

- Some recursive equation

- Question/Puzzle in the 4-D Space

- 2^y - 3^x

- Some weird maths

- Ladder on wall - derivation?

- Constructing pi

- A functional equation...

- When continuity of limit function implies uniform convergence?

- Logarithm of repeated exponential

- product of spheres

- Iteration square root of arcsinh

- Basic Economics and the Money Illusion

- Trisecting an angle

- DE for sine vibration

- Addition of infinities

- Summation by parts

- DOUBLETHINK IN EINSTEINIANA

- Question about the paper of Erdos and Selfridge

- NAIVE JOHN DOAN, SILLY STEPHEN HAWKING AND THE SPEED OF LIGHT

- Rejecta Mathematica

- Fibonacci

- NEW COSMOLOGY?

- THE SHORTEST HISTORY OF RELATIVITY

- If Attitudes Could Change Overnight the Recession Would Be Over In 24 Hours

- THE SHORTEST HISTORY OF THERMODYNAMICS

- Oblique torus slice: intersecting circles

- Number with gcd, a^p+b^p.

- An easy number theory problem

- A probabilistic problem on searching

- Pesky minimum problem

- History of Mathematics

- good algebraic approximations to pi

- Analysis with limit (2n-1)!

- Equal Sums of Like Powers for k = 1,3,5,7 (Feasible class project)

- Fourier transform

- Number theory problem

- POSTSCIENTISM: FALSE AXIOMS AND ABSURD AXIOMS

- Automorphisms of symmetric graphs

- testing Littlewood's conjecture with Phi and sqrt(2)

- Understanding the quotient ring nomenclature

- Schwarz Lemma qual question

- Stokes' Theorem exercise

- NAIVE JOHN DOAN, SILLY STEPHEN HAWKING AND THE SPEED OF LIGHT

- Distribution of the length of a random segment in an interval