
The question of whether a computer can think is no more interesting than the question of whether a submarine can swim. - E. W. Dijkstra

I would like to start with the science of Mathematics. And my first question is: which symbol is the most frequently used in mathematical formulas? I am pretty sure that it is the sign for "equality" or "identity", namely ' = '.

Now I think that it is impossible to develop Predicate Calculus, as a part of mathematical logic, without the notion of identity. But let us assume for a moment that it is possible nevertheless. Then we can find a kind of mathematical definition in 'Principia Mathematica' by Russell and Whitehead, chapter 'Identity':

a equals b means that, for all properties P: P is a property of a if and only if P is a property of b.

( a = b ) :<-> ∀P: P(a) <-> P(b) .

This sounds reasonable. Consider, however, the expression ' 1 = 1 '. Then the ' 1 ' on the left of the equality sign is not equal to the ' 1 ' on the right of the equality sign, because the property 'being on the left' is obviously not equivalent to the property 'being on the right'. Therefore we arrive at a paradox: 1 <> 1 .

Hence identity is not properly defined by the logic in 'Principia Mathematica'.

But now we are in trouble. I have never seen another valid definition of equality, the equals sign ' = ', the most important mathematical concept in existence!

This is not the end of the story, though. So-called "equivalence relations" are supposed to have the following properties (reflexivity, symmetry and transitivity):

a ≡ a ; a ≡ b -> b ≡ a

( a ≡ b and b ≡ c ) -> ( a ≡ c )

Quoting a (Dutch) textbook, equivalence relations should be conceived as a "generalization" of "ordinary" equality. But tell me: how can something be generalized if it is not even properly defined itself?

The time has come to give my own opinion.

There is no such thing as an "absolute" identity. Every identity holds only in some, or in many, respects. Equivalence relations cannot be distinguished at all from "ordinary" equalities. Just replace ' ≡ ' by ' = '. What's in a word anyway?

Distinguishing "equivalence relations" and "definition by abstraction" from "common identity" and equality is merely a good example of how to make mathematics unnecessarily complicated.

John Franks:

Consider, the expression

Hans de Bruijn = Hans de Bruijn

Then, the Hans de Bruijn on the left of the equality sign is not equal to the one on the right of the equality sign, because the property 'being on the left' is obviously not equivalent with the property 'being on the right'. Therefore we conclude the original poster must have been (at least) two people.

Doug Merritt:

The spot where you went wrong is in considering 'being on the left / right' to be valid predicates, for in terms of algebra, they are not. In fact, this is a specific instance of a very general confusion about mathematical systems, which is that the language used to describe a mathematical system generally does not follow the same rules as the system being described.

Formal axiomatic systems (the ultimate in mathematical rigor) use a meta language to describe the language of the system being set up.

This dual language system has been explored very extensively, and there are some very interesting results, such as the impossibility (I believe it's been proved) of using a single meta language to define both itself and the usual axiomatic systems of analysis.

Richard O'Keefe:

Do we have to drag out the type / token / quotation stuff all over again?

In the equation

1 = 1

^ ^ ^

a b c

there are three parts, labelled a, b, and c. a labels a token belonging to the type '1', b labels a token belonging to the type '=', and c labels a token belonging to the type '1'. a and c clearly label different tokens (as de Bruijn observes), but the equation is not mentioning those tokens but using them to refer to the number 1.

If de Bruijn's argument were valid, we would never be able to talk about anything at all. (There used to be another occurrence of that name in this sentence but my deleting it didn't delete de Bruijn himself.)

Okay, that's fair enough.

Of course, I have been well aware of this "confusion" between the tokens and the meaning of the tokens. But let's carefully rephrase from the 'Principia':

( a = b ) :<-> ∀P: P(a) <-> P(b) .

Am I blind or what? Did nobody read the ' ∀ ' in that statement? I did nothing other than take that forAll quite literally, just as it is meant, without doubt, by the authors themselves.

And now everybody comes to me and says that it means 'all', except being on the left, except the brand name of the ink, except the font of the letters used, except the rank in the alphabet. In other words: except almost everything! Therefore it is not a forAll at all!

My point is this. I wouldn't have made any trouble if Russell and Whitehead (and many mathematicians) had been humble enough to recognize that there simply does not exist such a thing as "all properties". A definition like the following would have been more than sufficient instead:

( a = b ) :<-> Some P: P(a) <-> P(b) .

Now give me an enumeration of any properties that you want to be allowed for the purpose of identifying the "meaning" of the symbols used (: Pattern Recognition techniques?), and I will no longer be your opponent.
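To make the idea of an explicit, finite enumeration of properties concrete, here is a minimal Python sketch. It reads the proposal as "a and b count as equal with respect to a chosen finite list of properties", which is only one possible interpretation. The `Token` class, its fields and the helper `equal_wrt` are hypothetical names introduced for this illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

@dataclass(frozen=True)
class Token:
    """A written symbol (hypothetical fields): what it denotes, where it stands, how it is printed."""
    value: str
    position: int
    font: str

Property = Callable[[Token], object]

def equal_wrt(a: Token, b: Token, properties: Iterable[Property]) -> bool:
    """True iff every property in the chosen finite list takes the same value on a and b."""
    return all(p(a) == p(b) for p in properties)

# The two occurrences of '1' in the string "1 = 1" (character positions 0 and 4).
left = Token(value="1", position=0, font="serif")
right = Token(value="1", position=4, font="serif")

by_value = [lambda t: t.value]
by_value_and_position = [lambda t: t.value, lambda t: t.position]

print(equal_wrt(left, right, by_value))               # True
print(equal_wrt(left, right, by_value_and_position))  # False
```

Note that `left == right` is also False here, because a dataclass compares all of its fields. Python's default notion of equality for such objects is itself "equality with respect to a fixed list of properties".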

Useful remark: the set of properties needed for identification will basically be finite. Further thinking along these lines reveals that:

Identity <> One and only

In other words: being identical is not the same as being one and only one.

Seven is seven. But seven apples is not the same as seven pears. Zero is zero. But having no bread is not the same as having no water.

It goes much deeper than this, though.

In quantum mechanics, there is the concept of Identical Particles. Quoted without permission from another website:

One of the fundamental postulates of quantum mechanics is the essential indistinguishability of particles of the same species. What this means, in practice, is that we cannot label particles of the same species: i.e., a proton is just a proton; we cannot meaningfully talk of proton number 1 and proton number 2, etc. Note that no such constraint arises in classical mechanics.

But the latter is not true. Suppose that we have two identical pictures on a web page, as implemented by:

<TR>

<TD WIDTH=410><IMG SRC="zoeken01.jpg" WIDTH=400></TD>

<TD WIDTH=410><IMG SRC="zoeken01.jpg" WIDTH=400></TD>

</TR>

Then the situation is exactly analogous to that of the identical particles in QM. Even if the pictures are labeled, we cannot meaningfully talk of picture number 1 and picture number 2. Suppose, namely, that the picture on the left is labeled as 1 and the picture on the right is labeled as 2. Exchange the two pictures. Since the pictures are identical, it's impossible to tell whether they have been interchanged or not. Yet there are two pictures instead of one.

They are identical, but they are not one and only one thing.
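Programmers meet the same distinction every day. In Python, `==` compares content while `is` compares object identity, so two pictures with identical content can be equal without being one and the same object. The sketch below models the two pictures as hypothetical lists of pixel values. It is an analogy only, not a model of quantum particles.

```python
# Two "pictures" with identical content, modelled as two separate lists of
# pixel values (a hypothetical stand-in for the two <IMG> elements).
left_picture = [0, 255, 128, 64]
right_picture = [0, 255, 128, 64]

print(left_picture == right_picture)  # True: identical content
print(left_picture is right_picture)  # False: two objects, not one

page = [left_picture, right_picture]
swapped = [right_picture, left_picture]

# Exchanging the pictures cannot be detected by comparing content...
print(page == swapped)  # True
# ...yet there are still two distinct objects on the page.
print(len({id(p) for p in page}))  # 2
```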

The above has immense consequences for mathematical reasoning. As an example, let's recall the following theorem: there exists one and only one empty set. This is not true. Actually, there exist many empty sets. And they are all identical. But they are not one and only one set.

Another example, with more profound consequences, is the set of all naturals. All sets of all natural numbers are identical. But this does not mean that there is only one such set. Another way of looking at this is that the mathematical abstraction of the set, or maybe rather the class, of all naturals has many instances, to speak in terms of Object Oriented Programming. Let us now reconsider the theorem that the set of all natural numbers has the same cardinality as a proper subset of itself, namely the set of all even natural numbers. This is commonly visualized as follows:

1 2 3 4 5 6 7 8 9 10 11 12 ...

2 4 6 8 10 12 14 16 18 20 22 24 ...
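The correspondence in the two rows above is the map n ↦ 2n. A short Python sketch can only check a finite initial segment (here, as an assumed cut-off, the first 12 naturals). It shows that the pairing is one-to-one and that halving recovers the original numbers, i.e. the even naturals are the naturals counted with unit 2.

```python
N = 12  # hypothetical cut-off: only finitely many pairs can be printed
naturals = list(range(1, N + 1))
evens = [2 * n for n in naturals]  # the correspondence n -> 2n

for n, e in zip(naturals, evens):
    print(n, "<->", e)

# One-to-one on this segment: distinct naturals give distinct even numbers.
assert len(set(evens)) == len(naturals)
# Halving undoes the map: the evens are the naturals counted with unit 2.
assert [e // 2 for e in evens] == naturals
```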

Sie wissen das nicht, aber sie tun es (: Karl Marx). They don't know it, but they do it. It is seen here that the set has already been split in two: (1) the set of naturals and (2) the set of even naturals. As a consequence, the set of even naturals is no longer considered as a subset of the set of all naturals.

Thus the set of all even naturals may be a proper subset of the set of all naturals, but it is no longer considered as such. Herewith, a highly counter-intuitive aspect of Cantor's theory of cardinals is largely removed. For now the theory says that there exists a set of all naturals, and another set of even naturals, and that there is a one-to-one correspondence (an equivalence, in the sense of cardinality) between these two sets; therefore they have the same cardinality. The set of even naturals is just the set of all naturals with a "re-named" unit element for counting, called 2 instead of 1.

Παντα ρει,

ουδεν μενει

(Panta rhei, ouden menei), to be translated as:

"Everything flows, nothing remains" (Heraclitus of Ephesus, 540-480 BC).

Thus one might even argue that there isn't a single thing which is one and only one. Let time be attached as a label to everything. Then it is obvious that everything changes with time, meaning that anything can always be considered as arbitrarily many identical instances. When it comes to the (re)conciliation of Mathematics with Physical Reality, I think this is one of the main issues to be considered. The key concept is that a mathematical abstraction is quite like an OOP class (it's a set, anyway), and physical reality is like a bunch of instantiations of that class.

ZFC Killer Axiom

As a matter of Natural Philosophy, we have established that, if x is a member of X, then there is certainly no physical reason why x should not be, at the same time, a part (i.e. subset) of X. Since I am a physicist, not a mathematician, I cannot even imagine an element which is not a subset as well. In addition, being a part of something always seems to be more general than being an element-ary part of something. Can someone please stand up and explain to me how to acquire a measuring device that can ever distinguish a single x from the set { x } containing that single element x? I am rather certain that no such device can be found in the entire Universe! Therefore the following additional axiom (11) for Zermelo-Fraenkel Set Theory (ZFC) will be proposed:

∀ x : x = { x }

In words: the box {} is not a part of the set. An apple cannot be distinguished from the set which contains only that apple as a member.

But anyhow, with the 11th axiom, it immediately follows that:

∀x,X : (x ∈ X) ⇒ ({x} ⊆ X) ⇒ (x ⊆ X)

In other words (take my advice), people had better:

Look at what I am doing. Being a civilized debater, I whole-heartedly agree with those great achievements of Set Theory ;-) I have embraced all 10 axioms of ZFC. But as a physicist, unfortunately, I have to build theories which are in close agreement with physical evidence. Therefore I was forced to augment the ZFC system with that tiny additional axiom. The resulting system could be called "ZFC augmented" or: ZFC+. I am very well aware of the fact that ZFC+, due to its 11 axioms instead of 10, will yield fewer valid theorems than ZFC. (Geez, did I do that on purpose?) All this provided that our system is consistent, because if such is not the case, there will be no theorems at all! If you cannot accept these terms, you'd better quit this forum, here and now. For the rest of us, the time has come to lean back and see what happens when the 11 axioms of ZFC+ interact with each other.

Oh well, this has become a much more dramatic exercise than I first thought.

A (now obsolete) web page gave rise to a heated debate about x = { x } in sci.math.

Jesse F. Hughes and Chas Brown proved the following with common set theory: there is only one set in my ZFC+ universe.

Lemma. ∀ x , y : ( y ∈ x ) ⇔ ( y = x )

Proof (as quoted from the thread).

Roughly speaking, (Ax) x = {x} implies that if y in x, then also y in {x}; but {x} is a set with only one element, so it follows that we must have y = x.

The above uses the standard axiom that says that two sets are equal if and only if they have exactly the same members; i.e. the axiom that states that (Ax)(Ay) ((x=y) <-> (Az) (z in x <-> z in y)).

Cheers - Chas

The latter remark by Chas may be essential.

(Weak pairing) (A x)(A y)(E z)(x in z & y in z)

Now, let's see what we can prove with that plus Han's Brilliant Discovery that x = {x}, rewritten with definitions expanded.

(HBD) (A x)(A y)( y in x <=> y = x )

Put those together and what happens?

Let x and y be given and let z be a set containing both x and y (from weak pairing). Applying HBD, we see:

x in z <=> x = z and y in z <=> y = z.

But since x *is* in z and y *is* in z, we see that x = z = y, and thus x and y are equal.

Hence, weak pairing + HBD proves that there is only one set in the universe.
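The quoted argument can be cross-checked by brute force on small finite domains. The Python sketch below enumerates every possible membership relation on a domain of 1, 2 or 3 objects and keeps those that satisfy both HBD and weak pairing. It is only a sanity check on finite models (a restriction of this sketch). The quoted proof itself covers every universe.

```python
from itertools import product

def models(n: int):
    """Yield every membership relation on an n-element domain satisfying HBD and weak pairing."""
    domain = range(n)
    pairs = [(y, x) for y in domain for x in domain]  # (y, x) stands for "y in x"
    for bits in product((False, True), repeat=len(pairs)):
        member = {pair for pair, bit in zip(pairs, bits) if bit}
        # HBD: y in x  <=>  y = x
        hbd = all(((y, x) in member) == (y == x) for y, x in pairs)
        # Weak pairing: for all x, y there is a z with x in z and y in z
        weak_pairing = all(
            any((x, z) in member and (y, z) in member for z in domain)
            for x in domain for y in domain
        )
        if hbd and weak_pairing:
            yield member

for n in range(1, 4):
    print(n, len(list(models(n))))
# 1 1
# 2 0
# 3 0
```

Only the one-element domain survives, in line with the conclusion that HBD plus weak pairing leaves a single set.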

Thus ZFC+ contains only one set. But it gets even worse. As quoted from the same article:

It is trivial to prove that augmenting ZF with (A x)x = {x} is inconsistent. First, prove that the empty set exists -- a trivial theorem in ZF. Then we have by your axiom that {} = {{}} and hence {} is an element of {}. But by definition of {}, we also have {} is not an element of {}.
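Python's `frozenset` compares sets extensionally (same members means equal), so it can serve as a small model of hereditarily finite sets. That use is an assumption of this sketch, not part of the quoted argument. The sketch reproduces the key step: {} is a member of {{}} but has no members itself, so {} = {{}} cannot hold under extensionality.

```python
empty = frozenset()              # {}
singleton = frozenset({empty})   # {{}}

# Python compares frozensets extensionally: same members <=> equal.
print(empty in singleton)   # True:  {} is a member of {{}}
print(empty in empty)       # False: {} has no members at all
print(empty == singleton)   # False: so {} = {{}} fails
```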

Thus there exists only one set, which then must be the empty set, because we can prove in ZFC that the latter exists. Thus x = { x } indeed blows up the whole of ZFC! That's why we shall call x = { x } the ZFC Killer Axiom.

Physicists would say that ZFC is a highly unstable system, since it already blows up when a seemingly innocent change is made in its foundations. Blows up!? Han, what are you doing!? What am I doing? I'm just doing Physics!

The fact that the eleventh axiom is in accordance with physics, and that ZFC plus that eleventh axiom shrivels into nothingness, doesn't hurt me so much, since the alternative would be something much worse. Leaving these mathematical systems intact simply means that anomalies with respect to the real world are certainly to be expected. Provided that x = { x } is indeed a sensible axiom, physically speaking, we have shown that ZFC is contradictory to real-world experience.

But this would mean that the Axioms of ZFC may be contradictory to the Laws of Nature. Hence the foundations of mathematics may be incompatible with the foundations of physics. Therefore: No Mathematics (with ZFC) XOR No Physics. If this is really our choice, then I would say: make up your mind!

Even more shocking is that the blowup occurs at such an elementary level. As Jesse F. Hughes has analysed correctly:

even though it is inconsistent with the minimal subtheory of ZF defined by: (1) Pairing axiom: for any x,y, there is a set containing just x and y. (2) Non-triviality condition: There are (at least) two distinct sets.

Note that { x } is a set such that (a) x is in { x } and (b) if y is in { x } then y = x. This is what { x } *means*.

Let x and y be distinct (by (2)). Form the set {x,y} (by (1)). Then by your axiom {x,y} = {{x,y}}. By (1), x is in {x,y} and hence x is in {{x,y}} (Note: this is not an application of extensionality, but just the logical axiom of substitution.). Similarly, y is in {{x,y}}. But by (b), we see that x = {x,y} and y = {x,y}, so x = y.

In other words, if we replace the abstract universe by a universe of bullets:

Last but not least, I apologize for possibly having (ab)used the mental capacity of my fellow debaters in 'sci.math' for the purpose of breeding my own offspring.

As quoted from The Universe of Discourse: Frege and Peano were the first to recognize that one must distinguish between x and {x}.

The proposition " a is a member (or element) of A " is denoted as: a ∈ A.

But, for example, if F := { S , T }, S := { a , b , c , d }, T := { a , c , e }, why then are S and T elements of the "set" F?

And, on the other hand, if x is a member of X, then there is certainly no physical reason why x should not be, at the same time, a part (i.e. subset) of X. Since I am a physicist, not a mathematician, I cannot even imagine an element which is not a subset too. In addition, being a part of something always seems to be more general than being an element-ary part of something. Being a subset seems to be more general than being a member.

That is, we are tempted to conjecture the following theorem:

MEMBERS = CLASSES

Now it's a well-known fact that partitions are closely related to equivalence relations.
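One standard way to see the link: any relation of the form x ~ y iff f(x) = f(y) is reflexive, symmetric and transitive, and its equivalence classes partition the underlying set. The Python sketch below uses congruence modulo 3 on {0, ..., 8} as an assumed example. The helper name `equivalence_classes` is hypothetical.

```python
from collections import defaultdict

def equivalence_classes(elements, key):
    """Group elements into classes, where x ~ y iff key(x) == key(y)."""
    groups = defaultdict(set)
    for x in elements:
        groups[key(x)].add(x)
    return [frozenset(g) for g in groups.values()]

# Assumed example: congruence modulo 3 on {0, ..., 8}.
parts = equivalence_classes(range(9), key=lambda n: n % 3)
print(sorted(sorted(p) for p in parts))  # [[0, 3, 6], [1, 4, 7], [2, 5, 8]]

# The classes form a partition: non-empty, and since the sizes add up to the
# size of the union, pairwise disjoint; together they cover the whole set.
assert all(parts)
assert sum(len(p) for p in parts) == 9
assert frozenset().union(*parts) == frozenset(range(9))
```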

And the members of a set are closely related to "ordinary equality". This means that the system proposed above is quite consistent in this respect: just read the paragraph about Mathematical Identity again.

An example from group theory: different rotations, say about 45, 90, 180 or 360 degrees, can be grouped together into the general class of rotations. But these rotations are themselves already "defined by abstraction": rotations carried out in Eindhoven or New York, taking an hour or a second, done by me or by Jan Willem Nienhuys.

Members = Classes

Let's consider Set Theory as it still is nowadays. What is the definition of a set? According to Georg Cantor, the founder of set theory himself, a set is:

Eine Zusammenfassung von bestimmten wohlunterschiedenen Objecten unserer Anschauung oder unseres Denkens (welche die Elemente genannt werden) zu einem Ganzen.

(A gathering together into a whole of definite, well-distinguished objects of our perception or of our thought, which are called the elements.)

Thus Set Theory is based upon the "primitive notion" that something can be an element / a member of a set. A set is defined by its elements / members.

Let A and B be sets. The proposition " A is a subset of B " is denoted as: A ⊆ B.

We are now ready to "understand" the following transla(ted quota)tion from a (Dutch) mathematics textbook:

Essential for the theory of sets is the distinction between set and element. Never mix up ⊆ and ∈ , a and { a } .

(S.T.M. Ackermans / J.H. van Lint, 'Algebra en Analyse', Academic Science, Den Haag, 1976)

Since S and T have the elements a and c in common, they are not "wohlunterschiedenen Objecten" (well-distinguished objects), if we strictly adhere to Cantor's description.

∀x,X : (x ∈ X) ⇒ (x ⊆ X)

But let's leave this as it is for a while.

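And let's continue rephrasing standard knowledge. The empty set is denoted as Ø.

If A is a set, then the set of so-called classes { x, y, ... } is called a partition of A iff the following conditions hold:

(1) every class is non-empty: x ≠ Ø ;

(2) any two different classes are disjoint: x ≠ y ⇒ x ∩ y = Ø ;

(3) the classes together fill up A: x ∪ y ∪ ... = A .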
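The usual three conditions (every class non-empty, distinct classes disjoint, the classes together covering A) are easy to check mechanically for finite sets. The Python sketch below defines a hypothetical helper, `is_partition`, and applies it among other things to the earlier example F = { S , T } with S = { a , b , c , d } and T = { a , c , e }.

```python
from itertools import combinations

def is_partition(classes, whole):
    """True iff the classes are non-empty, pairwise disjoint and cover `whole`."""
    classes = [frozenset(c) for c in classes]
    non_empty = all(classes)
    disjoint = all(not (c & d) for c, d in combinations(classes, 2))
    covers = frozenset().union(*classes) == frozenset(whole)
    return non_empty and disjoint and covers

A = {1, 2, 3, 4, 5}
print(is_partition([{1, 2}, {3}, {4, 5}], A))       # True
print(is_partition([{1, 2}, {2, 3}, {4, 5}], A))    # False: {1, 2} and {2, 3} overlap
print(is_partition([{1, 2, 3}, set(), {4, 5}], A))  # False: an empty class

# The family F = {S, T} from the text: S and T share a and c.
S = {"a", "b", "c", "d"}
T = {"a", "c", "e"}
print(is_partition([S, T], S | T))  # False
```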

Considering partitions, what do you think of the following "analogy" (just as a set is defined by its elements): a partition is completely determined by its classes.

It is thus clear from this presentation that there is no "essential" difference between the classes in a partition and the elements in a set. In mathematical terms: elements and classes are isomorphic. In plain English: classes and elements denote the same kind of thing.

90° rotation = 360° rotation

(: equivalence class)

is not essentially different from:

90° in Eindhoven = 90° in New York

(: identity)

Elementary Partitions

We can think now of a Top-Down approach to Set Theory, instead of the usual

bottom-up approach. In many cases, Cantor's "primitive notion" of the members

in a set is not a relevant concept anyway. It has been established in practice

that a kind of "set theory without the elements" is perfectly possible. Support

for such a set theory - void of "elementalism" - is found, for example, in

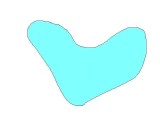

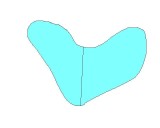

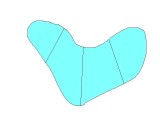

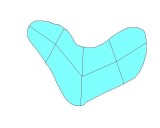

'Constructive

Solid

Geometry
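In Constructive Solid Geometry a solid is typically described by a membership test ("is this point inside?"), and complex shapes are built with union, intersection and complement, without ever listing the solid's points. The 2-D Python sketch below assumes disks as the only primitive shape. The function names are illustrative and not taken from any CSG library.

```python
from typing import Callable

Region = Callable[[float, float], bool]  # a region is just an "inside?" test

def disk(cx: float, cy: float, r: float) -> Region:
    return lambda x, y: (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2

def union(a: Region, b: Region) -> Region:
    return lambda x, y: a(x, y) or b(x, y)

def intersection(a: Region, b: Region) -> Region:
    return lambda x, y: a(x, y) and b(x, y)

def complement(a: Region) -> Region:
    return lambda x, y: not a(x, y)

left = disk(0.0, 0.0, 1.0)
right = disk(1.0, 0.0, 1.0)
lens = intersection(left, right)               # left ∩ right
crescent = intersection(left, complement(right))  # left ∩ right'

print(lens(0.5, 0.0), crescent(0.5, 0.0))    # True False
print(lens(-0.5, 0.0), crescent(-0.5, 0.0))  # False True
```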

The top-down approach has to be initialized with the axioms of Boolean Algebra, instead of ZFC. So let's start with Boolean Algebra. In order to enable the bootstrap, some rudimentary (naive) set theory may be assumed as well (as in the MathWorld page), but it is not strictly necessary. Anyway, here goes.

A Boolean Algebra is defined for objects x, y, z, ... together with two binary operations ∩ and ∪ and a unary operation ' such that it satisfies the following axioms:

Since in our Top-Down approach there are no elements yet, we cannot speak about a Set, let alone about its Members. The term "Boolean Object" is suggested instead, for a "set without members".

In order to furnish Boolean Objects with the properties of a "real" set, we first have to establish what "being a part of" means, as follows:

(A ⊆ B) ≡ (A ∩ B = A) .

Or equivalently:

(A ⊆ B) ≡ (A ∪ B = B) .

Here the right-hand sides are defined by the axioms of Boolean Algebra for the objects A and B.
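For ordinary finite sets, both characterizations of "being a part of" coincide with the usual subset test. The Python sketch below checks this exhaustively for all pairs of subsets of an assumed three-element universe. It uses Python sets as a concrete model, whereas the Boolean Objects in the text are abstract.

```python
from itertools import chain, combinations

def subsets(universe):
    """All subsets of a finite universe, as frozensets."""
    items = list(universe)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

U = {1, 2, 3}  # assumed example universe
for A in subsets(U):
    for B in subsets(U):
        via_meet = (A & B) == A   # (A ∩ B = A)
        via_join = (A | B) == B   # (A ∪ B = B)
        assert (A <= B) == via_meet == via_join
print("all", len(subsets(U)) ** 2, "pairs agree")
```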

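Hereafter, being a Partition is defined in the same way as the mainstream theory of classes and partitions is set up.

Definition. A partition of a boolean object V is a (naive) set U of boolean objects W with the following properties:

(1) every W in U is non-empty: W ≠ Ø ;

(2) any two different members of U are disjoint: W1 ≠ W2 ⇒ W1 ∩ W2 = Ø ;

(3) the members of U together fill up V: the union of all W in U equals V .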

No matter how you subdivide that piece of cake, it remains the same piece of cake. But the partitions are different.

Or: 9 = 5 + 4 = 3 + 3 + 3 = 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 .

It's still the same number, but differently composed every time.

Let's proceed. Once upon a time, Euclid said the wise words: a point is what has no part. And who am I to deny it?

Definition. A non-empty boolean object is called a point iff there doesn't exist another non-empty boolean object that is a proper part of it. That is, if E is a point then there doesn't exist any P ≠ Ø with P ⊂ E, and vice versa.

Definition. An Elementary Partition (EP) is a partition where all members are points.

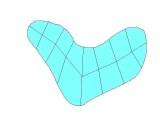

Example. Another basic axiom of Boolean Objects could be that at least some of them exist. Let these objects be named a, b. And let there be no other objects in our universe of discourse. Theorem: then { a' ∩ b , a ∩ b' , a ∩ b } is an elementary partition of a ∪ b, provided that a' ∩ b ≠ Ø ; a ∩ b' ≠ Ø ; a ∩ b ≠ Ø .

Proof:

| a' ∩ b = Ø | a ∩ b' = Ø | a ∩ b = Ø | Elementary partition of a ∪ b |
|---|---|---|---|
| False | False | False | { a' ∩ b , a ∩ b' , a ∩ b } |
| False | False | True | { a , b } |
| False | True | False | { a , a' ∩ b } |
| False | True | True | { b } |
| True | False | False | { b , a ∩ b' } |
| True | False | True | { a } |
| True | True | False | { a } = { b } |
| True | True | True | Ø |

The above procedure of forming minterm elements as the points in an elementary partition can be carried out with any number of Boolean Objects.
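The minterm procedure can be sketched in Python with one caveat. To compute anything, the sketch models Boolean Objects as finite sets of points, which is exactly the bottom-up view the text wants to avoid, so treat it as a concrete model only. For each choice of "inside / outside" per object, it intersects either the object or its complement (relative to the union) and keeps the non-empty results. The function name `elementary_partition` and the example contents are hypothetical.

```python
from itertools import product

def elementary_partition(*objects: frozenset) -> set:
    """Non-empty minterms of the given finite sets, taken relative to their union."""
    universe = frozenset().union(*objects)
    cells = set()
    for keep in product((True, False), repeat=len(objects)):
        cell = universe
        for obj, inside in zip(objects, keep):
            cell = cell & obj if inside else cell - obj  # x or x'
        if cell:  # drop empty minterms
            cells.add(cell)
    return cells

a = frozenset({1, 2, 3})
b = frozenset({3, 4})
print(sorted(sorted(c) for c in elementary_partition(a, b)))  # [[1, 2], [3], [4]]
```

With b = {3}, so that b ⊆ a, the same function returns { b , a ∩ b' }, matching the row of the table where a' ∩ b = Ø.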

The theory of minterms is employed extensively in the design of logical devices. A lucid representation of them is the so-called Karnaugh Map. An excellent exposition (and much more) can be found in:

H. Graham Flegg, 'Schakel-algebra', Prisma-Technica, translated from: Boolean Algebra and its Application, Blackie & Son Ltd., London and Glasgow, 1965.

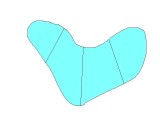

Example. Let U and V be two different partitions of the same set A, where U = {a,b} and V = {c,d}. And let the only boolean objects given be those in {a,b,c,d}.

Question: What is the elementary partition E of A?

Answer: E = { a ∩ c , b ∩ c , a ∩ d , b ∩ d }, provided that these members of E are non-empty.
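The answer above is what is usually called the common refinement of U and V. A Python sketch, again using finite sets as a stand-in for Boolean objects and with made-up contents for a, b, c and d, collects all non-empty intersections u ∩ v. The helper name `common_refinement` is hypothetical.

```python
def common_refinement(U, V):
    """All non-empty intersections u ∩ v with u in U and v in V."""
    return {u & v for u in U for v in V if u & v}

# Made-up contents, purely for illustration.
a, b = frozenset({1, 2, 3}), frozenset({4, 5, 6})   # U = {a, b}
c, d = frozenset({1, 2}), frozenset({3, 4, 5, 6})   # V = {c, d}
A = a | b

E = common_refinement({a, b}, {c, d})
print(sorted(sorted(e) for e in E))  # [[1, 2], [3], [4, 5, 6]]
```

Here b ∩ c is empty and is therefore dropped, which is what the proviso "provided that these members of E are non-empty" takes care of.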

Example. The elementary partition E of a set such as A = { 1 , 2 , 3 , 4 , 5 , 6 , 7 , ... } is given by E = {{1},{2},{3},{4},{5},{6},{7}, ... }. If x is a member of A: x ∈ A, then {x} is a subset of A: {x} ⊆ A, but {x} is a member of E: {x} ∈ E.

Example. The set X itself is a member of the trivial partition T of X, consisting of the set as a whole: T = {X}. An EP set X can be an element of itself, but only if the trivial partition {X} of X is considered. Then X ∈ X and X = {X}. If the trivial partition of X is excluded, then X cannot be an element of itself (: cleans up Russell's paradox).

(Note. The resemblance between a set and the trivial partition associated with it explains much of my "confusion" :-)

Example. An "ordered pair" (a,b) may be defined in common Set Theory by {{a},{a,b}}. Such an ordered pair is not a partition of any set, because two different elements in a partition should have nothing in common. But: {a} ∩ {a,b} = a.

Theorem. An "ordered pair" (a,b) cannot be defined by {{a},{a,b}}, because two different elements in an EP set should have nothing in common.

Proof: {a} ∩ {a,b} = a ∩ (a ∪ b) = (a ∩ a) ∪ (a ∩ b) = a ∪ Ø = a, and a is non-empty by definition.
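In ordinary set theory the same observation can be checked with Python frozensets. The sketch builds the usual (Kuratowski) pair {{a},{a,b}} and tests the partition conditions on its members. `kuratowski_pair` and `is_partition_of_union` are hypothetical helper names. Ordinary set theory gives {a} ∩ {a,b} = {a}, whereas the text writes a, following its own convention that a = {a}.

```python
from itertools import combinations

def is_partition_of_union(family):
    """Members non-empty and pairwise disjoint (they then partition their own union)."""
    members = list(family)
    return all(members) and all(not (m & n) for m, n in combinations(members, 2))

def kuratowski_pair(a, b):
    """The usual set-theoretic ordered pair (a, b) = {{a}, {a, b}}."""
    return frozenset({frozenset({a}), frozenset({a, b})})

pair = kuratowski_pair("a", "b")
print(frozenset.intersection(*pair))  # frozenset({'a'}): the members overlap
print(is_partition_of_union(pair))    # False
# In common set theory the pair still does its usual job of encoding order:
print(kuratowski_pair("a", "b") == kuratowski_pair("b", "a"))  # False
```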

Example. The Power Set of any set A is not a partition of A.

Theorem. The Power Set of an EP set does not exist.

Proof for a two-element set A = {a,b}: in {Ø, a, b, {a,b}} the first element is empty, and the last element {a,b} is not disjoint from the middle elements a and b.

Non-existence of the Power Set wipes away the Cardinality of the Reals and the Continuum Hypothesis, when these are eventually founded upon EP sets.

Example. Finite Ordinals are defined in standard set theory by putting more and more curly brackets around the empty set, like in:

1 = {Ø} , 2 = {Ø,{Ø}} , 3 = {Ø,{Ø},{Ø,{Ø}}} , ...

But none of these sets is a partition of any set. For example, with 3 = {Ø,{Ø},{Ø,{Ø}}}: the first element is empty, and the second and the third element have the member Ø in common.

Thus Finite Ordinals cannot be defined in EP set theory in this manner.
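The finite ordinals described above (von Neumann's construction, n + 1 = n ∪ {n}) can be generated with Python frozensets and tested against the partition conditions. The helper names are hypothetical. For the first few ordinals, the sketch simply confirms that each one has the empty set among its members and therefore fails the "non-empty" condition.

```python
from itertools import combinations

def is_partition_of_union(family):
    """Members non-empty and pairwise disjoint."""
    members = list(family)
    return all(members) and all(not (m & n) for m, n in combinations(members, 2))

def von_neumann(n: int) -> frozenset:
    """Finite ordinal n, built as 0 = {} and n + 1 = n ∪ {n}."""
    ordinal = frozenset()
    for _ in range(n):
        ordinal = ordinal | {ordinal}
    return ordinal

for n in range(1, 5):
    print(n, len(von_neumann(n)), is_partition_of_union(von_neumann(n)))
# 1 1 False
# 2 2 False
# 3 3 False
# 4 4 False
```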

We are ready for another Theorem in the theory of elementary partitions:

∀ a,A : (a ∈ A) ⇒ (a ⊆ A)

Proof (with A = { a , x , y , z , ... } an EP set, so that A = a ∪ x ∪ y ∪ z ... and a is disjoint from x, y, z, ...): a ∩ A = a ∩ (a ∪ x ∪ y ∪ z ...) = (a ∩ a) ∪ (a ∩ x) ∪ (a ∩ y) ∪ (a ∩ z) ... = a ∪ Ø ∪ Ø ∪ Ø ... = a. Which by definition is the same as: a ⊆ A.

Theorem (ZFC Killer Axiom):

∀ x : ( x ≠ Ø ) ⇒ ( x = { x } )

Proof: regarded as the trivial partition of x, { x } has x as its only member, which is non-empty, does not intersect other parts, and fills up the whole of x.

Conclusion. The axiom that kills ZFC doesn't kill another set theory. Therefore EP set theory is not even consistent with a finitary part of common set theory.

Example. Consider the EP set { a , b , c }. Then

{ a , b , c } = { { a , b } , c } = { a , { b , c } } = { { a , c } , b } .

Proof: by definition a , b , c are all disjoint and non-empty. Therefore the part { a , b } is disjoint from c, the part { b , c } is disjoint from a, and the part { a , c } is disjoint from b. All these parts are non-empty and fill up the whole EP set.

Remark. This example clearly shows the difference between common set theory and Elementary Partitions set theory concerning membership.

Essential for the theory of sets is the distinction between set and element. Never mix up ⊆ and ∈ , a and { a } .

(S.T.M. Ackermans / J.H. van Lint, 'Algebra en Analyse', Academic Science, Den Haag, 1976)

But ... an object is not uniquely defined by its members. There are many ways to partition an object into elements and define it to be an EP set.

The stone which the builders rejected, the same is become the head of the corner (Matthew 21:42).

Boolean Algebra and all of its laws are valid for EP sets, provided that all of the members in the universe of these EP sets are either disjoint or equal. Then (and only then) can we form { a , b } ∪ { a , c } = { a , b , c } or { a , b , c , d } ∩ { p , a , c , q } = { a , c }, and safely apply all the laws of Boolean algebra. No miracle, because EP set theory is essentially the same as Constructive Solid Geometry, which is the geometric (counter)part of common set theory.

The Physics of Infinity

An interesting question is whether actual infinities could arise in the physical world. Actually, they do in our physical theories. Quantum field theory has to deal with infinite numbers and "renormalize". Cosmology is even more abundant in infinities. How about Black Holes? And the Great Singularity, which is assumed to be at the Origin of the whole Universe? Whew!

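But seriously now:

"... dass ein Physiker überrascht sein sollte, wenn er Phänomene in der Natur vorfände, in deren Beschreibung das Wort Unendlich nicht durch das Wort sehr gross ersetzt werden dürfte."

("... that a physicist should be surprised if he were to find phenomena in nature in whose description the word 'infinite' could not be replaced by the words 'very large'.")

(: Carl Friedrich von Weizsäcker).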

Of course, there is no physical evidence that infinities actually do exist. Actually, there is physical evidence that actual infinities do not exist. I have done my best to collect some substantial, physical, empirical arguments. Most of these are certainly not my own.

(Remember!)

So much for the infinitely large. How about the infinitely small?

> In the rest frame of the electron, the least possible error in the measurement of its coordinates is:
>
> dq = h/mc.

Here q = position, h = Planck's constant, m = electron mass, c = velocity of light. If you try to measure the position of an electron, you must send, for example, a photon to it. The electron is disturbed by this photon, which is known as the Compton effect. As a consequence, there is a definite bound on the accuracy with which you can locate the electron. This is expressed by the above formula. Any attempt to locate the electron within this interval is "severely punished" by:

> ..... the (in general) inevitable production of electron-positron pairs in the process of measuring the coordinates of an electron. This formation of new particles in a way which cannot be detected by the process itself renders meaningless the measurement of the electron coordinates.

> Einstein's clocks were supposed to emit extremely short signals and to measure accurately time intervals between signals emitted and received. In a word, an Einstein clock was a radar system, and its requirements were thus very different from those of a frequency standard. ...

The modern atomic clocks are what the above "standard" refers to.

dt = h/mc² (t = time)

It is thus definitely impossible to measure (electron) time more accurately than this amount, due to the same Compton effect as in the position measurement above.
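Plugging in numbers gives a feel for the size of the two bounds. The Python sketch below uses h, as the text does. Many textbooks use ħ = h/2π instead, which gives values smaller by a factor 2π. The constants are CODATA 2018 values.

```python
# CODATA 2018 values (SI units); h and c are exact by definition.
h = 6.62607015e-34        # Planck constant, J s
c = 299_792_458.0         # speed of light, m/s
m_e = 9.1093837015e-31    # electron mass, kg

dq = h / (m_e * c)        # position bound dq = h/mc, in m
dt = h / (m_e * c ** 2)   # time bound dt = h/mc^2, in s

print(f"dq = {dq:.4e} m")  # dq = 2.4263e-12 m
print(f"dt = {dt:.4e} s")  # dt = 8.0933e-21 s
```

The value of dq is the electron's Compton wavelength, and dt is simply the time light needs to cross it (dq / c).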

Han de Bruijn; Software Developer; SSC-ICT-3xO; Landbergstraat 15, 2628 CE Delft, The Netherlands. E-mail: J.G.M.deBruijn@TUDelft.NL; http://hdebruijn.soo.dto.tudelft.nl/www/; Tel: +31 15 27 82751. "A little bit of Physics would be NO Idleness in Mathematics" (HdB)

Sad Remark. When I say that Mathematics should be founded on Physics, then I mean "common" physics. I am quite reluctant to accept "advanced" physics, like elementary particle physics or cosmology, as truly scientific. I'm a bit ashamed that these present-day areas of interest seem to belong to physics anyway.

QED has suffered from its infamous divergences ever since it came into existence. People like Richard P. Feynman have devised some dirty "renormalization" tricks in order to cure the very worst symptoms of this "infinity" disease. QED nowadays gives some of the desired answers. But, as Feynman himself points out in the famous Feynman Lectures on Physics (Volume II), the difficulties already showed up in classical electromagnetic theory. The self-energy of point charges is infinite. Electrons cannot even move, if "actual infinities do exist"!

Physical theories can, and will, suffer from an inadequate foundation of their mathematics, I think. People should have the courage to postulate the following as their working hypothesis.

Basic axiom: EVERYTHING IS FINITE

=================================

Challenge to everyone:

Give me one valid counter-example: a piece of evidence that the Infinite is actually useful in physics, in a theoretical or practical sense (independent of wishful mathematical thinking, of course).

1/λ = R(1/m^2 - 1/n^2)

More details are found in this article from the 1989 sci.physics thread.

p·V = n·R·T

Consequently, the theory of an ideal gas also predicts infinities. As soon as its volume V approaches zero (at a given room temperature T), the pressure p will rise to infinity. Every physicist knows, however, that such is not the case, for a multitude of reasons. The most important one is that a real gas does not behave exactly according to the laws for an ideal gas, certainly not at high pressures. Instead, it will be subject to a change of state: it will become a liquid in the first place. And it may even become a solid, if pressure continues to squeeze it to still lower values of its volume.
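The divergence of the ideal-gas pressure is easy to see numerically. The Python sketch assumes one mole of gas at room temperature (293.15 K). Each tenfold reduction of V multiplies p by ten, without bound. For the smallest volumes the numbers describe no real gas at all, which is exactly the point made here.

```python
R = 8.314462618  # molar gas constant, J/(mol K)

def ideal_gas_pressure(n_mol: float, T: float, V: float) -> float:
    """Pressure in Pa from p V = n R T (valid for an ideal gas only)."""
    return n_mol * R * T / V

n_mol, T = 1.0, 293.15  # assumed: one mole at room temperature
for V in (1e-2, 1e-3, 1e-4, 1e-5, 1e-6):  # volumes in m^3
    print(f"V = {V:.0e} m^3 -> p = {ideal_gas_pressure(n_mol, T, V):.3e} Pa")
```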

The infinities associated with the ideal gas law are due to idealization of real gas behaviour. They disappear as soon as the real gas is modelled more accurately, that is: as soon as the mathematical models become more realistic.

If infinities are likely to occur within the realm of certain physical laws, then the matter subject to these laws will change state in such a way that infinities are avoided. This effectively means that such "laws" will no longer be valid.

Suppose you have a giant vase and a bunch of ping pong balls with an integer written on each one, e.g. just like the lottery, so the balls are numbered 1, 2, 3, ... and so on. At one minute to noon you put balls 1 to 10 in the vase and take out number 1. At half a minute to noon you put balls 11 - 20 in the vase and take out number 2. At one quarter minute to noon you put balls 21 - 30 in the vase and take out number 3. Continue in this fashion. Obviously this is physically impossible, but you get the idea. Now the question is this: At noon, how many ping pong balls are in the vase?

And here come some answers, according to Mainstream Mathematics.

>At noon, how many ping pong balls are in the vase?

None.

there are no balls in the vase at noon.

So the vase is empty at noon.

At noon the Vase is empty.

That is a proof that the vase is empty. [ ... ] no ball is present at noon.

surely the vase is empty.

Every ball placed into the vase (at a well-defined time before noon) is taken out from the vase (at a later, well-defined time before noon).

Virgil:

Which balls that are inserted into the vase before noon fail to be removed before noon. Name one!

So each time you add more balls to the vase (10) then you take out (1), and that gives him an empty vase? Not in my universe...

the vase is definitely not empty.

According to this problem, noon is not reachable.

Well, first of all, there is no consensus in this thread; there are three camps: one camp says that there will be zero balls in the vase at noon, another camp says that there will be an infinite number of balls in the vase at noon, and the third camp says the answer is indeterminate.

OK. Time to make up our mind. Who is right and who is wrong ?

The good news is that there is no sensible disagreement among the debaters about the timestamps from 1 minute before noon up to just before noon.

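We can say that the number of balls $B_k$ in the vase after step $k = 1, 2, 3, 4, \ldots$ is:

$$B_k = 9 + 9\,\frac{\ln(-1/t_k)}{\ln 2}, \qquad \text{where} \quad t_k = -\frac{1}{2^{k-1}} \quad \text{for all } k \in \mathbb{N}.$$

Here $t_k$ is the time of step $k$ in minutes relative to noon, so that $\ln(-1/t_k)/\ln 2 = k - 1$ and $B_k = 9k$: ten balls in, one ball out, at every step.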
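As a check, the formula can be compared with a direct, finite simulation of the process. This is a sketch under the assumption, taken from the quoted problem, that step k adds balls 10(k-1)+1 up to 10k and then removes ball k; the function names are illustrative only. For every finite k both counts give 9k, and every timestamp t_k is strictly negative, i.e. before noon.

```python
import math

def timestamp(k: int) -> float:
    """t_k = -1/2^(k-1), in minutes relative to noon (noon itself would be t = 0)."""
    return -1.0 / 2 ** (k - 1)

def balls_by_formula(k: int) -> float:
    """B_k = 9 + 9 . ln(-1/t_k) / ln(2)."""
    return 9 + 9 * math.log(-1.0 / timestamp(k)) / math.log(2)

def balls_by_simulation(k: int) -> int:
    """Play the first k steps: put in balls 10(j-1)+1 .. 10j, then take out ball j."""
    vase = set()
    for j in range(1, k + 1):
        vase.update(range(10 * (j - 1) + 1, 10 * j + 1))
        vase.remove(j)  # ball j was put in at step ceil(j/10) <= j, so it is present
    return len(vase)

for k in range(1, 6):
    print(k, timestamp(k), balls_by_formula(k), balls_by_simulation(k))
```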

But within this formula there is no timestamp at noon, because that would involve k = ∞, which is nonsense in every respect.

I have marked the following posting by rennie nelson as exceptionally right to the point:

[ ... ] . There is no noon in the problem as written, every event happens before noon. The universe of the problem is not the same as the universe of the question. No amount of reasoning or deduction will overcome this.

The bad news is that mainstream mathematics nevertheless claims to have a solution at noon and says that the vase must be empty then.

From a rational point of view, this must sound like a true miracle. It is strongly reminiscent of the way all kinds of superstitious beliefs are still going strong, despite their manifest lack of scientific content. So there must be something out there which is even more convincing than logic. Before going into details, let me tell you that a satisfactory explanation, in my not so humble opinion, can be found only if people dare to recognize that Mathematics, too, is just a humble activity of human beings. There is nothing heavenly about mathematics. At least, it is no more divine than, for example, composing music or writing that godly book. This implies that mathematics is bound, in the very first place, to historical and social restrictions. Yes, I want you to get rid of the idea that "Mathematics is independent of society" !

Something out there must be more convincing than logic. Let's see what it is. How can somebody conceive the idea that the whole of mathematics is made up of nothing but Sets ? This has only become possible because society itself has adopted the shape of an "ungeheure Warensammlung" (an immense collection of commodities: Karl Marx in 'Das Kapital'). Is it a mere coincidence that the birth of Set Theory has its social analogue in the enormous accumulation of all kinds of goods which marks the turn of the century (1900) ? Is it a mere coincidence that Georg Cantor's father was himself a merchant ? So his son became very familiar with those huge "sets" in the storehouses of his family.

So we may conclude, in the first place, that the birth of Set Theory was inspired by social circumstances. But this is not the end of the story. Even nowadays, nobody can think of an idea which better fits the Capitalist System's view of society. Go to a supermarket and convince yourself ! It all means that rational arguments are not good enough to deprive Set Theory of its predominant role in mathematics. Read my lips: I don't want to get rid of Set Theory as a whole, I only want to deprive it of its predominant position.

Mathematical concepts originate and become important within the context of our human society, with all its illogical and unscientific taboos. But it is also conceivable that certain concepts will not originate in the given social circumstances, simply because such new concepts would have unacceptable economic and political (and personal) consequences. New ideas will not come, essentially because, deep in our heart, we don't want them. So yes, further progress in mathematics may be inhibited by the way our Western "civilization" is organised: as an ungeheure Warensammlung, and nothing else matters.

We all watched, but we saw nothing, felt nothing but fear and the fierce wanting not to become like them.

(: Dante's Divina Commedia)

Why am I doing this ? Because I don't believe that people with wrong thoughts can nevertheless do right things. I'm well aware of the fact that thoughts of a Mathematical Nature form only a negligible part of human thinking these days. Most of the time, our level of sophistication does not exceed the needs of everyday Greed. Not anymore. And most of our so-called "creativity" has been swallowed by Commercial Interests altogether. Yes, the world has become a Giant Marketplace. Run the risk of being an object of disdain, if you dare to attach value to something other than Marketing and Management. Why be a Scientist, when you can be his Boss, huh ? But, with the commercialization of everything, telling lies has become the standard way of communicating with each other. Don't be silly ! Standard Set Theory, especially, reflects quite accurately the peculiarities of Capitalist Society. What else to expect, if all the important decisions are made by the Money that we Have, and not by the Humans that we Are !

Function = Mapping + Time

The classical definition of a function sounds like this. A mapping, or a function, F from a set A to a set B is a subset of the Cartesian product:

F ⊆ A × B .

A function is thus a kind of relationship between A and B, which in addition has the following property:

∀ (a ∈ A) ∃! (b ∈ B) : (a,b) ∈ F

That is: for each element a of A there exists exactly one element b of B such that (a,b) is an element of F.
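For finite sets, the classical definition can be checked mechanically. A minimal sketch; `is_function` is a hypothetical helper, and it only works for finite A and B, because it enumerates the whole Cartesian product A × B.

```python
from itertools import product

def is_function(F: set, A: set, B: set) -> bool:
    """Classical test: F is a subset of A x B, and every a in A has exactly one b with (a, b) in F."""
    if not F <= set(product(A, B)):
        return False
    return all(sum(1 for (x, _) in F if x == a) == 1 for a in A)

A = {1, 2, 3}
B = {1, 4, 9}
square = {(a, a * a) for a in A}
print(is_function(square, A, B))             # True
print(is_function(square | {(1, 4)}, A, B))  # False: 1 now has two images
print(is_function({(1, 1)}, A, B))           # False: 2 and 3 have no image
```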

What could be wrong with such a definition ? Let's devise a simple example: the function y = x², defined on the natural numbers (: A = N).

Then form the set B, as follows:

| A | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | . . . . . |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| B | 1 | 4 | 9 | 16 | 25 | 36 | 49 | 64 | 81 | 100 | 121 | 144 | 169 | 196 | 225 | 256 | 289 | 324 | 361 | 400 | 441 | 484 | 529 | 576 | 625 | . . . . . |

The venom is in the tail. Because, as soon as these theorists think that there is sufficient evidence, I would shout: Give me more, give me more ! And, to satisfy my needs, they will be busy again, for some . . . time. Now that's the secret ! What's lacking in the classical definition of a function is simply the fact that it costs a finite amount of time to make the calculations, I repeat: the calculations, that are needed to create the set B, with the desired numbers in it. The classical definition, on the contrary, is completely static. Not a single indication of the work, the labour, the physical effort, the dynamics of building the products in set B from the commodities in set A. Instead, the production costs are simply denied. The arduous creation of value is reduced to nothing but a timeless relationship that simply exists. Labour is reduced to just a heap of commodities and a heap of products, without the time-consuming process in between, without the dirty smell of sweat. Considered in this manner, the classical function definition suits the pipe dream of some of our entrepreneurs quite well.
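A sketch of the "Mapping + Time" view, assuming a Python generator as a model of a function that is computed rather than merely given: the pairs (a, a²) are produced only when asked for, and the wall-clock cost of producing them is measured with `time.perf_counter`. The names `squares` and `give_me_more` are hypothetical, and the elapsed times vary from machine to machine.

```python
import time
from itertools import count, islice

def squares():
    """Produce the pairs (a, a^2) for a = 1, 2, 3, ... one at a time, on demand."""
    for a in count(1):
        yield a, a * a

def give_me_more(source, how_many):
    """Take the next `how_many` pairs from `source` and report how long that took."""
    start = time.perf_counter()
    pairs = list(islice(source, how_many))
    return pairs, time.perf_counter() - start

B = squares()
first, t1 = give_me_more(B, 25)      # the 25 pairs of the table above
more, t2 = give_me_more(B, 100_000)  # "give me more": the work resumes at a = 26
print(first[-1], more[0], f"{t1:.2e} s", f"{t2:.2e} s")
```

Nothing in set B exists until somebody has paid for it in time; the generator keeps its state, so each request continues where the previous one stopped.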

It shouldn't be surprising if such a careless and biased abstraction sooner or later proves to be vulnerable, in both theoretical and practical applications. But instead of considering a reform of the classical function definition, which would have been relatively simple, these theorists went on and invented a whole bunch of "new" concepts. To mention a few: function (of course), (differential) operator, mapping, dynamical system, Turing machine, (Markov) algorithm, lambda calculus, (Post) productions. Each concept is meant to encompass still other aspects of the same process of getting the job done. With the advent of digital computers, this bunch of function definitions has been extended once more, with names and misnomers like: subroutine, procedure, program, script, executable, command, the methods of a class. All too many names for too many, rather trivial variations on one and the same theme. Quoting a favorite mathematician, without permission: There is perhaps no better example [ ... ] of missed opportunities than in the treatment of functions.