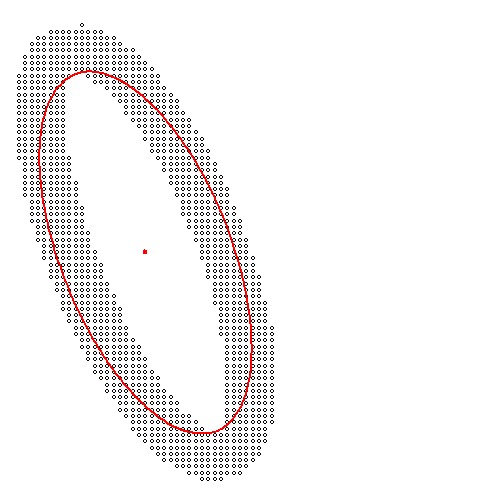

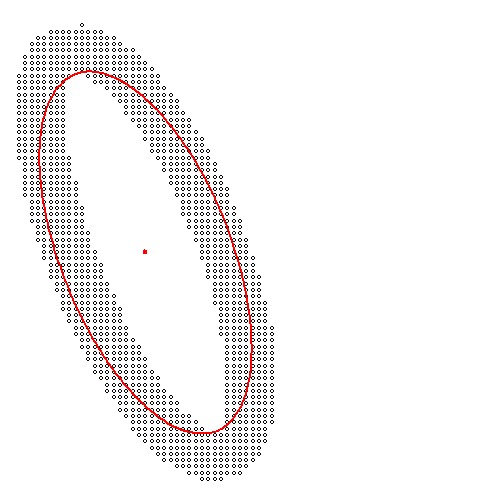

In general, the equation of a Best Fit Ellipse may be given by:

$$
\frac{1}{2}\:\frac{\sigma_{yy} (x-\mu_x)^2 - 2 \sigma_{xy} (x-\mu_x)(y-\mu_y)
+ \sigma_{xx} (y-\mu_y)^2}{\sigma_{xx}\sigma_{yy}-\sigma_{xy}^2} = 1
$$

where $(x_k,y_k)$ are the data points with weights $w_k$ in:

$$
\mu_x = \sum_k w_k x_k \quad \mbox{and} \quad
\mu_y = \sum_k w_k y_k \\
\sigma_{xx} = \sum_k w_k (x_k - \mu_x)^2 \quad \mbox{and} \quad
\sigma_{yy} = \sum_k w_k (y_k - \mu_y)^2 \\
\sigma_{xy} = \sum_k w_k (x_k - \mu_x) (y_k - \mu_y)
$$
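To make the definitions concrete, here is a minimal Python sketch of the weighted moments and of the left-hand side of the ellipse equation. It is not the Delphi Pascal program mentioned in this post. The function names `weighted_moments` and `ellipse_lhs` are made up for illustration, and the code assumes that the weights are meant to be normalized so that $\sum_k w_k = 1$, which the formulas above need in order for $\mu_x$ and $\mu_y$ to be means.

```python
def weighted_moments(points, weights):
    """Return (mu_x, mu_y, s_xx, s_yy, s_xy) for weighted 2-D data.

    Assumption: the weights are rescaled here so that they sum to 1.
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    w = [wk / total for wk in weights]

    mu_x = sum(wk * x for wk, (x, _) in zip(w, points))
    mu_y = sum(wk * y for wk, (_, y) in zip(w, points))
    s_xx = sum(wk * (x - mu_x) ** 2 for wk, (x, _) in zip(w, points))
    s_yy = sum(wk * (y - mu_y) ** 2 for wk, (_, y) in zip(w, points))
    s_xy = sum(wk * (x - mu_x) * (y - mu_y) for wk, (x, y) in zip(w, points))
    return mu_x, mu_y, s_xx, s_yy, s_xy


def ellipse_lhs(x, y, moments):
    """Evaluate the left-hand side of the ellipse equation at (x, y).

    A value of 1 means the point lies on the ellipse as defined above,
    including the factor 1/2.
    """
    mu_x, mu_y, s_xx, s_yy, s_xy = moments
    det = s_xx * s_yy - s_xy ** 2
    if det == 0:
        raise ValueError("degenerate data: covariance determinant is zero")
    dx, dy = x - mu_x, y - mu_y
    return 0.5 * (s_yy * dx * dx - 2 * s_xy * dx * dy + s_xx * dy * dy) / det


if __name__ == "__main__":
    # Hypothetical test data: four equally weighted points.
    pts = [(1.0, 0.0), (-1.0, 0.0), (0.0, 2.0), (0.0, -2.0)]
    m = weighted_moments(pts, [1.0, 1.0, 1.0, 1.0])
    for p in pts:
        print(p, ellipse_lhs(p[0], p[1], m))
```

For this small symmetric example each of the four points evaluates to 1, so they lie on the ellipse given by the formula. That says nothing yet about how the formula behaves on real, noisy data.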

Related:

Unfortunately, the accompanying numerical experiment reveals that practice is different from what I expected.

I now wonder what is wrong with my theory.

Delphi Pascal code and original data included.